Jenkins CI - S3

Install the recommended plugins and create an Admin account in the following steps.

At this point you should be able to log in and see the Jenkins home page.

Install Jenkins Plugins

On the Jenkins home page, select Manage Jenkins -> Manage Plugins in the left menu, open the Available tab, and search for the following plugins:

Blue Ocean - New Jenkins UI

Pipeline AWS - AWS Integration

Create the Pipeline AWS Jenkins Credential

On the Jenkins home page, click Credentials -> System in the left menu, select the global scope, and then click Add Credentials, again in the left menu.

Provide the AWS IAM credentials that allow the Jenkins Pipeline AWS plugin to access your S3 bucket: in the credential form, enter the Access Key ID and the Secret Access Key you created. You can also define an ID for the credential, which the Jenkinsfile configuration uses to refer to it.

In the Jenkinsfile, <Jenkins-Credential-ID> is the ID of the credential you’ve just created.

Blue Ocean Pipeline

Jenkins Blue Ocean UI makes it easier to create and configure a new pipeline.

Create a new pipeline and select GitHub as the repository.

Enter a Personal Access Token from GitHub to allow Jenkins to access and scan your repositories.

Follow the instructions and Blue Ocean will look for a Jenkinsfile. If one does not exist, Blue Ocean lets you define a new file and configure the pipeline.

If you create a pipeline with Blue Ocean, it commits the Jenkinsfile to your repository, with a structure similar to the following Jenkinsfile pipeline example:

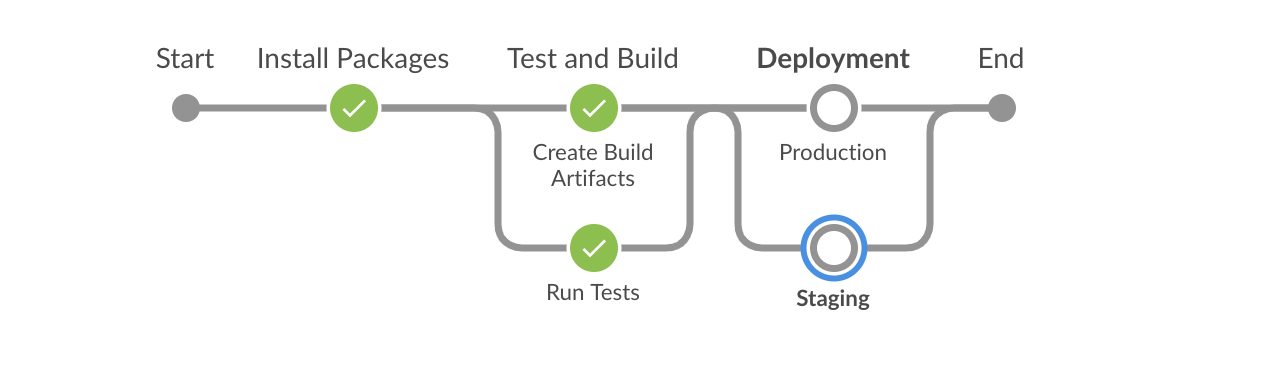

The Jenkinsfile above defines the pipeline shown in the Jenkins Blue Ocean Pipeline image.

The pipeline above uses a Docker agent to test the application and to create the final build files. The Deployment stage removes the files from a given S3 bucket, uploads the new build files, and sends an email reporting that a new deployment completed successfully.

Note that the agent definition uses a port range, so the job does not fail when a given port is already in use.

Set up the GitHub Webhook

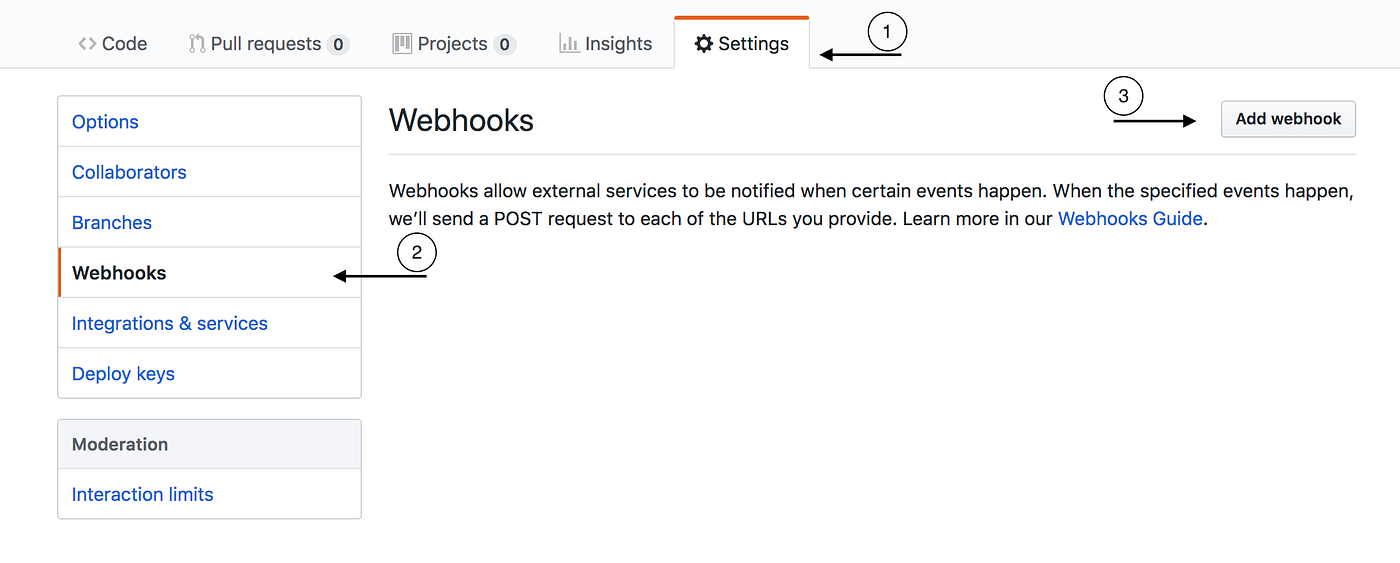

A webhook is an HTTP callback: an HTTP POST that occurs when something happens, that is, a simple event notification via HTTP POST. A GitHub webhook allows Jenkins to subscribe to repository events such as pushes and pull requests.

The webhook sends a POST request to your Jenkins instance to notify it that an action was triggered (PR, Push, Commit…) and that it should run a new job against the code changes.
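To make the notification flow concrete, here is a minimal sketch of the decision a webhook receiver has to make for each delivery. It is illustrative only and is not how the Jenkins GitHub plugin is implemented. GitHub sends the event name in the `X-GitHub-Event` header and the details as a JSON body; the function name `should_trigger_build` and the set of pull request actions treated as triggers are assumptions made for this example.

```python
import json

# Events this sketch treats as build triggers. The names match the values
# GitHub sends in the X-GitHub-Event header.
BUILD_EVENTS = {"push", "pull_request"}

# Pull request actions that bring new code to review (an assumed policy).
PR_ACTIONS = {"opened", "reopened", "synchronize"}


def should_trigger_build(event_name: str, body: bytes) -> bool:
    """Decide whether one webhook delivery should start a new job."""
    if event_name not in BUILD_EVENTS:
        # For example the "ping" event GitHub sends when a webhook is created.
        return False
    payload = json.loads(body)
    if event_name == "pull_request":
        return payload.get("action") in PR_ACTIONS
    return True


if __name__ == "__main__":
    push_body = json.dumps({"ref": "refs/heads/main"}).encode()
    print(should_trigger_build("push", push_body))
```

A closed pull request or a `ping` delivery would return `False` here, so no job would be started for it.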

Go to your repository Settings, select Webhooks in the left menu, and click the Add webhook button.

GitHub Repository Settings — Webhooks

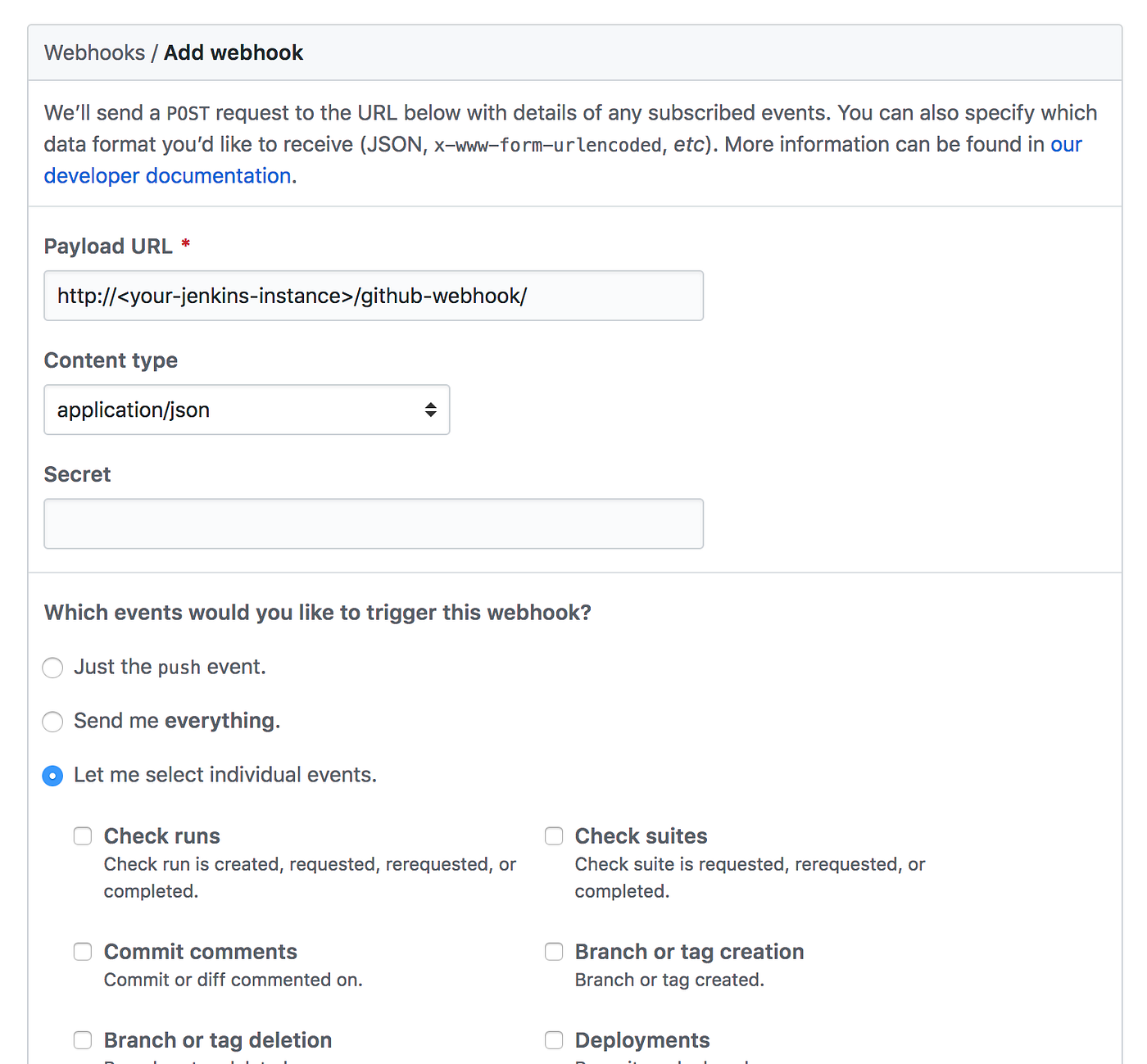

Set the Payload URL to http://<your-jenkins-instance>/github-webhook/ and the Content type to application/json.
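The Payload URL is easy to get slightly wrong, for example by doubling or dropping a slash, and the endpoint path ends with a trailing slash. As a small sketch, the helper below builds the URL from a Jenkins base address. The function name `github_webhook_url` and the host `jenkins.example.com` are placeholders invented for this example.

```python
def github_webhook_url(jenkins_base_url: str) -> str:
    """Return the Payload URL for a Jenkins instance (hypothetical helper)."""
    # Strip trailing slashes so "http://host/" and "http://host" give the
    # same result, then append the endpoint with its trailing slash.
    return jenkins_base_url.rstrip("/") + "/github-webhook/"


if __name__ == "__main__":
    # jenkins.example.com is a placeholder host, not a real instance.
    print(github_webhook_url("http://jenkins.example.com:8080/"))
```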

GitHub Repository Settings — Create a Webhook

Select the individual events that should trigger a new Jenkins build. I recommend selecting Pushes and Pull Requests.

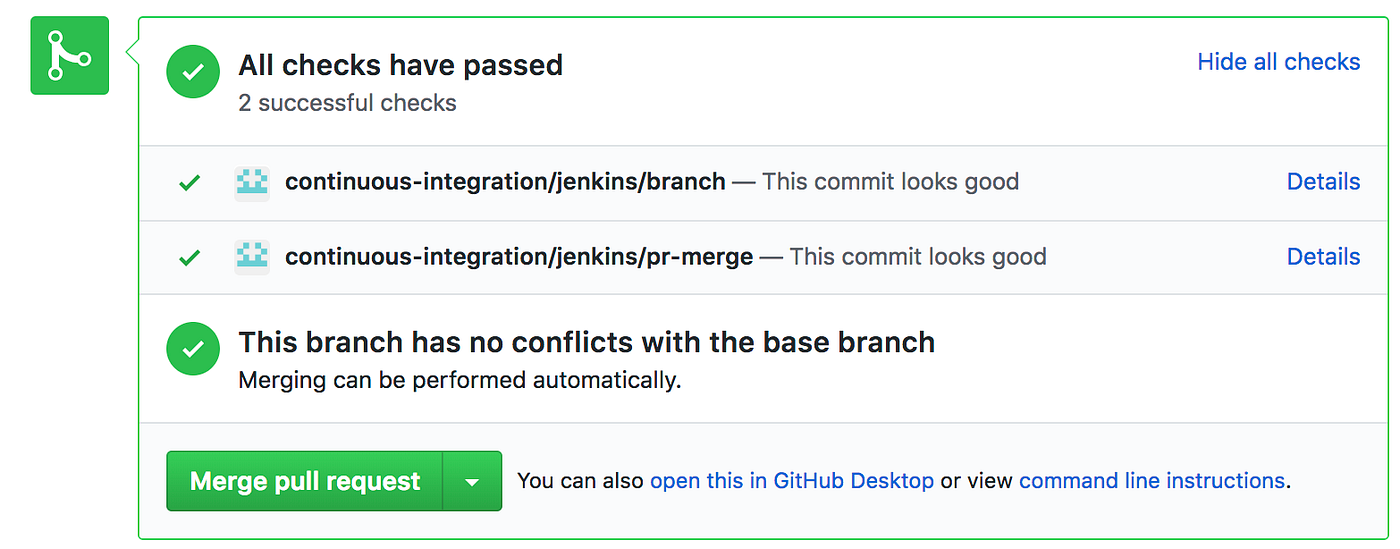

After that, once a PR is created and passes Jenkins validation, you will see a status message on the pull request similar to the one in the GitHub Pull Request Status image.

Create the S3 Bucket

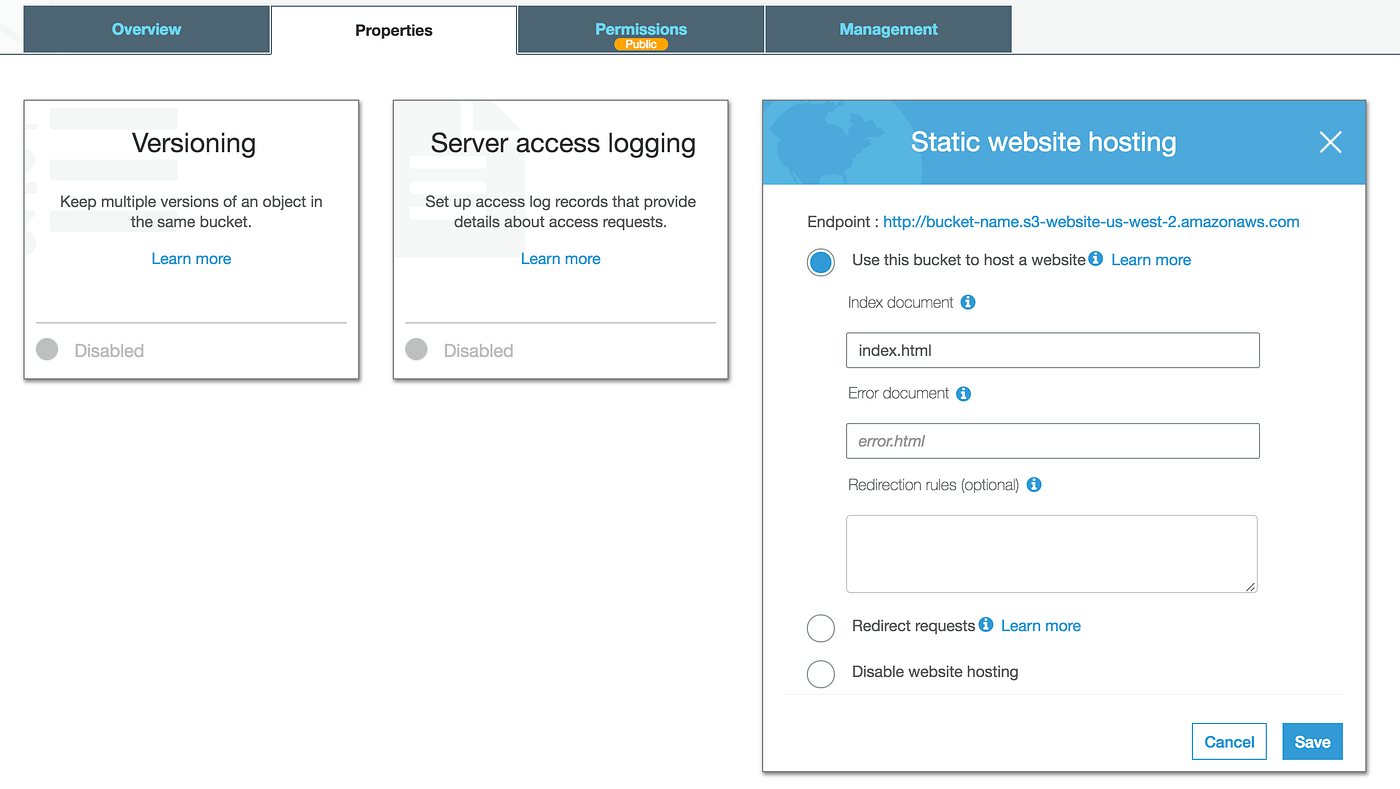

In your AWS console, go to Services -> S3 and click the Create bucket button. Choose your bucket name and region, untick both options under Manage public bucket policies for this bucket, and finish the bucket creation. Open the bucket Properties, turn on Static website hosting, and set the index document to your entry file, for example index.html.
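The article configures hosting through the console. If you prefer to script it, the same setting can be expressed as a website configuration document. The sketch below only builds that document, so it runs without AWS access. Using boto3 to apply it is an assumption of this example, not something the steps above require, and the function name and bucket name are placeholders.

```python
def static_website_configuration(index_document: str = "index.html") -> dict:
    """Build the website configuration for a bucket that serves a site.

    The shape follows what boto3's put_bucket_website accepts as its
    WebsiteConfiguration argument.
    """
    return {"IndexDocument": {"Suffix": index_document}}


# Assumed usage with boto3 (not executed here, needs AWS credentials):
#   import boto3
#   boto3.client("s3").put_bucket_website(
#       Bucket="my-site-bucket",  # placeholder bucket name
#       WebsiteConfiguration=static_website_configuration(),
#   )

if __name__ == "__main__":
    print(static_website_configuration())
```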

To use a custom domain name, you need to create a second bucket and select the Redirect requests option under Static website hosting. That option lets you define the domain that will be used to access the hosting bucket. Bear in mind that this secondary bucket answers with an HTTP 301 status code to redirect the requests.

At this point you should be able to access your website using your custom domain or via the bucket itself.
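For reference, the redirect option on the secondary bucket corresponds to a website configuration with a single redirect rule. The sketch below builds that document in the shape boto3's `put_bucket_website` accepts. Applying it with boto3 is an assumption of this example, and `redirect_configuration` and `www.example.com` are placeholder names.

```python
def redirect_configuration(target_host: str, protocol: str = "http") -> dict:
    """Build a website configuration that redirects every request."""
    if protocol not in ("http", "https"):
        raise ValueError("protocol must be 'http' or 'https'")
    return {
        "RedirectAllRequestsTo": {
            "HostName": target_host,  # host that requests are redirected to
            "Protocol": protocol,
        }
    }


if __name__ == "__main__":
    # www.example.com is a placeholder for your own domain.
    print(redirect_configuration("www.example.com"))
```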

Conclusion

This process has multiple pros and cons, and I would like to add my two cents by highlighting a couple of them.

Pros

A cheaper process with the good performance of a CDN for delivering static content.

AWS handles the traffic so scalability won’t be an issue.

Cons

Cache! Updates may take a while to propagate, as they have to wait for the cache to expire.

Server-side rendering is not available, so bots and crawlers won’t be able to get any metadata.

Apart from the cache, which can be handled by setting a short maxAge on index.html, this solution can be considered robust and sustainable, as it takes advantage of multiple AWS resources to meet the high availability and scalability standards required nowadays.
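As a rough sketch of the short maxAge idea, the helper below picks a `Cache-Control` value per uploaded file: a short lifetime for index.html so new deployments show up quickly, and a longer one for everything else. The function name and both durations (60 seconds and one day) are assumptions chosen for illustration, so tune them to your own release cadence.

```python
import posixpath


def cache_control_for(key: str, html_max_age: int = 60,
                      asset_max_age: int = 86400) -> str:
    """Return a Cache-Control header value for an S3 object key."""
    name = posixpath.basename(key)
    if name == "index.html":
        # The entry file changes on every deployment, so keep it short-lived.
        return f"max-age={html_max_age}"
    # Other build files can stay cached for longer.
    return f"max-age={asset_max_age}"


if __name__ == "__main__":
    for key in ("index.html", "static/js/main.js"):
        print(key, "->", cache_control_for(key))
```

The returned string would be set as the object's Cache-Control metadata when the build files are uploaded.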
